Designing Actionable Insights

EdTech

Dashboards

Information Architecture

RBAC

DataViz

Senior UX Designer

Context

What the Product Does

Actionable Insights at a Glance

The product should allow educators, administrators, and caregivers to view data for their students at various levels of depth, and provide actionable insights when necessary.

The Problem to Solve

Users weren’t sure how to use the data they were looking at.

Of the 48 users interviewed, 24% expressed confusion about what to do next. Analytics supported this, showing a low click-through rate (CTR) on dashboards and a high volume of support tickets. Together, these indicated that the existing design was too dense, required users to cross-reference too much to read the data, and lacked a clear “next step”.

The Impact of My Solution

Data Clarity

Data comprehension improved by 38%

Table readability scores improved by 40%

Time spent locating information decreased

Actionable Insights

Time to identify learning gaps reduced by 45%

Report exports reduced by 31%

Enabled classroom-to-student-level tracking with one click

Engagement & Satisfaction

User engagement increased by 62%

Satisfaction (CSAT) rose from 72% to 93%

Improved educator confidence in data-driven decisions

How this Solution was Developed

Research and Insights

28 unique screens reviewed; 74 UI inconsistencies identified.

These inconsistencies stemmed from the lack of a defined design system.

8 stakeholders interviewed (product, engineering, curriculum, and support)

Feedback indicated a history of issues with this product.

126 user feedback submissions analyzed.

36% mentioned the core issue examined in this case study.

Beyond the support tickets, 24% of the 48 teachers interviewed expressed confusion about what to do with the data they were given.

Some felt the data was too dense, providing information when they didn’t need it.

21% decrease in usage after initial login (based on usage analytics).

In tandem with the interviews, this confirmed that the core problem was “what do I do next?”.

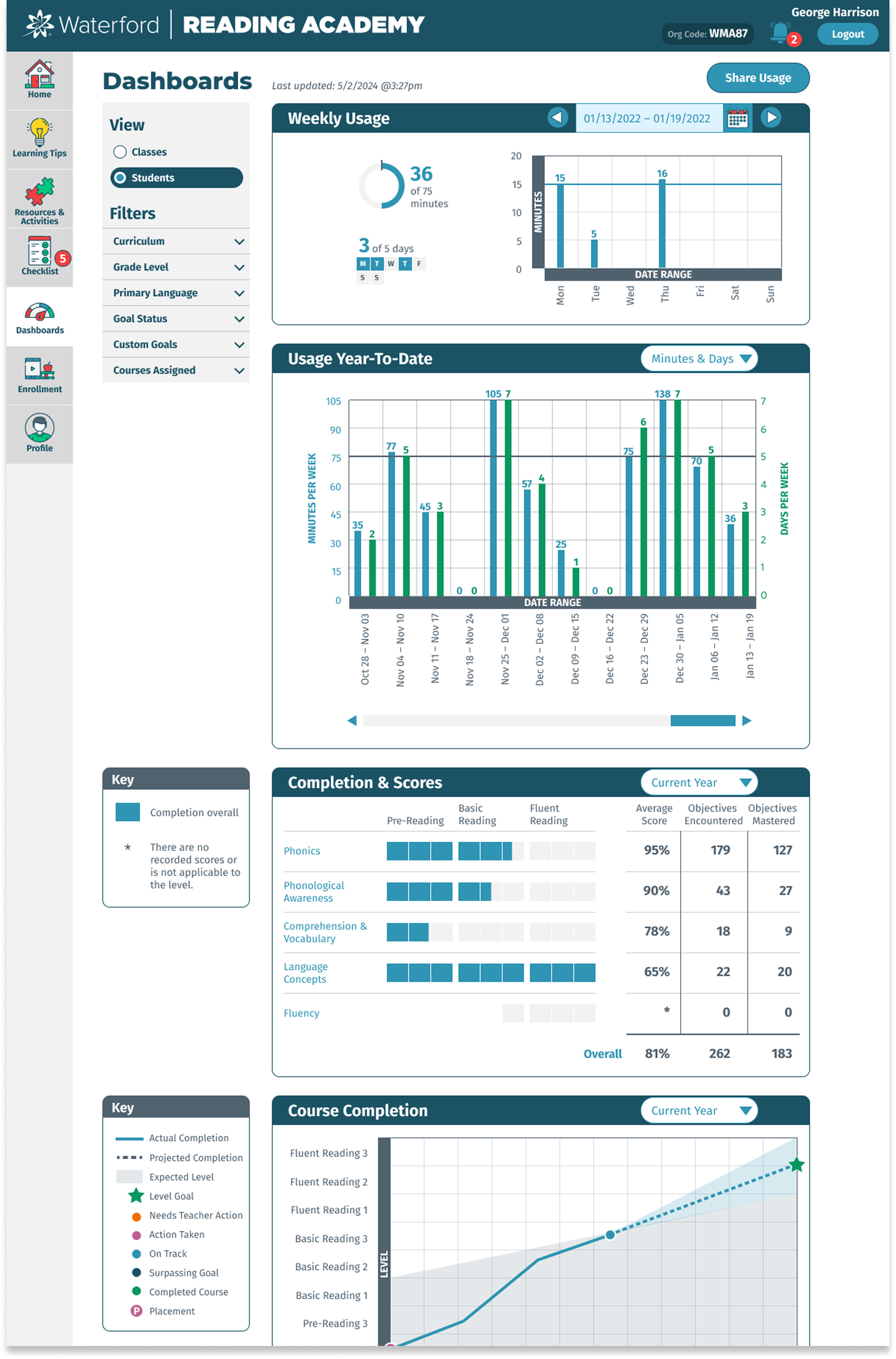

Existing Design Review

Detailed Review

A detailed review of the current experience confirmed what was discovered in the research phase and allowed me to establish what design principles would guide the process.

Design Principles

Clarity over Density

Simplify data views. Teachers don’t need school-wide data, and principals don’t need district-wide usage data. Focus each view on what that user needs.

Actionable UI

Make it clear what is being looked at. Add calls to action (CTAs) where necessary. Make what happens after a CTA is clicked predictable and simple to follow through on.

Role-Based Simplicity

Remove unnecessary options and cognitive overhead.
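As a rough illustration of how “Clarity over Density” and “Role-Based Simplicity” might translate into dashboard logic, the sketch below maps each role to the data scopes it sees. Everything here is hypothetical: the role names, the scope names, and the `visible_scopes` helper are assumptions for illustration, not the product’s actual access model.

```python
from enum import Enum


class Role(Enum):
    # Hypothetical roles, loosely based on the audiences named in this case study.
    CAREGIVER = "caregiver"
    TEACHER = "teacher"
    PRINCIPAL = "principal"
    DISTRICT_ADMIN = "district_admin"


# Assumed mapping: each role gets only the scopes it needs, nothing broader.
SCOPES_BY_ROLE = {
    Role.CAREGIVER: ["student"],
    Role.TEACHER: ["classroom", "student"],
    Role.PRINCIPAL: ["school", "classroom", "student"],
    Role.DISTRICT_ADMIN: ["district", "school"],
}


def visible_scopes(role: Role) -> list[str]:
    """Return the data scopes a dashboard should render for the given role."""
    return list(SCOPES_BY_ROLE.get(role, []))


# A teacher's view never includes school-wide data.
print(visible_scopes(Role.TEACHER))
```

Keeping the mapping in one place means a view can be built by iterating over the returned scopes, so a role never sees a tab or card it has no use for.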

Consistency through Design Systems

I led the design system efforts for the shared front-end library. This was a perfect place to implement a modular card-based component I had been working on with another designer.

Wireframes and Whiteboxes

Communicating Changes

Making sure everyone understands why design choices are made is an integral part of the process.

Checking with Developers

Even though we were using a design system that developers had already approved, I still met with the engineers working on this project to ensure every aspect of the design stayed within their constraints.

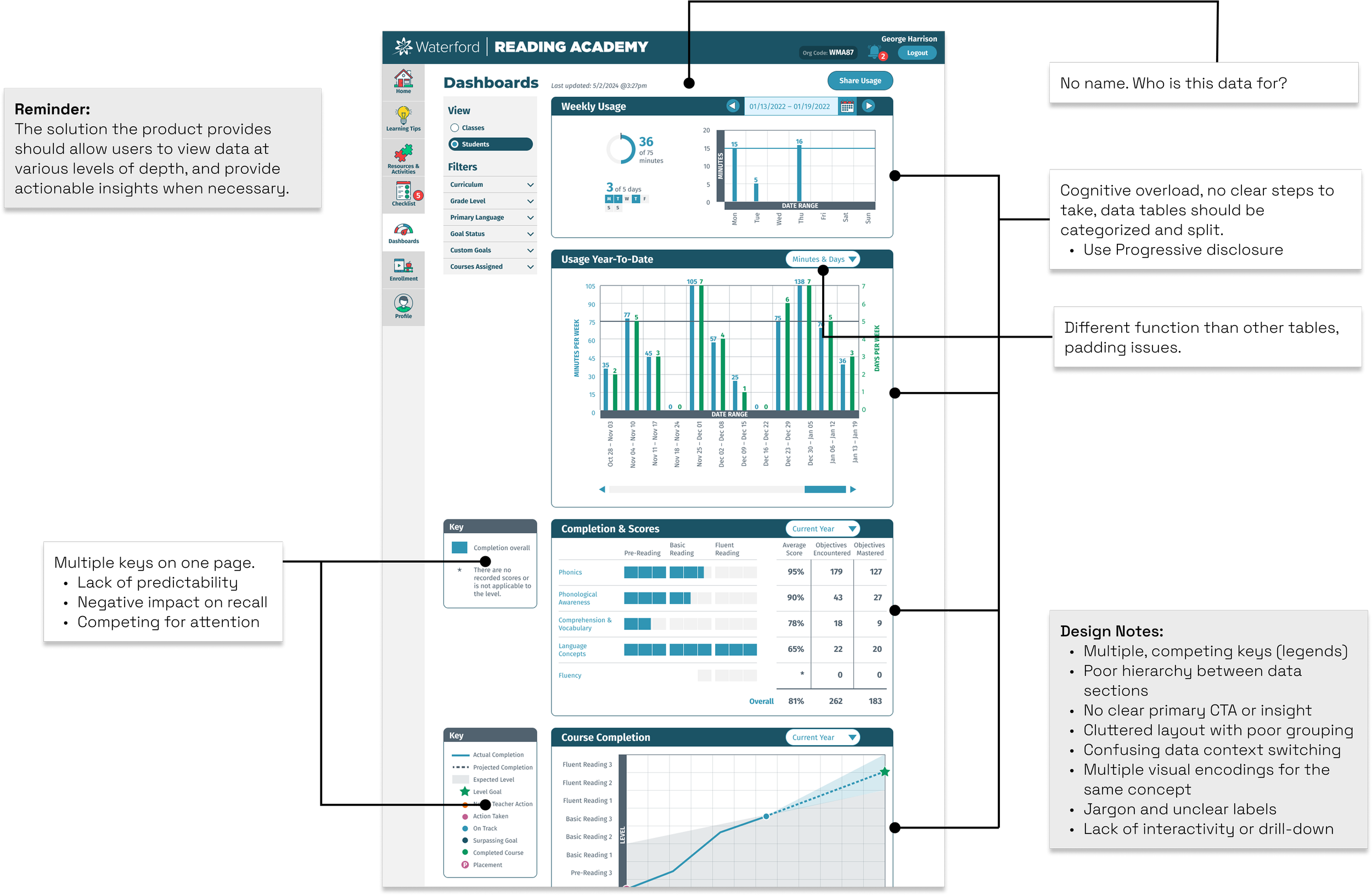

Updated Design

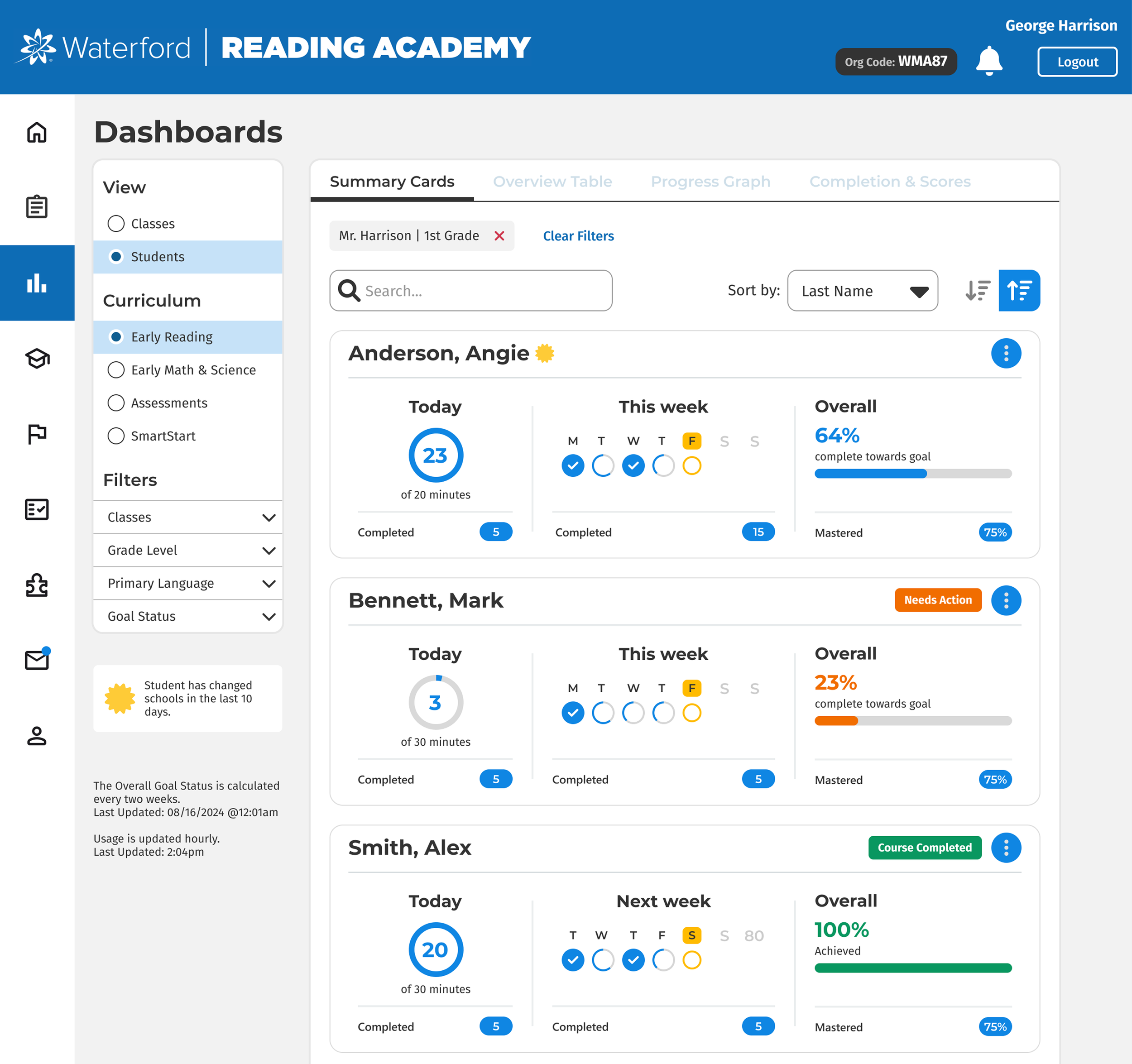

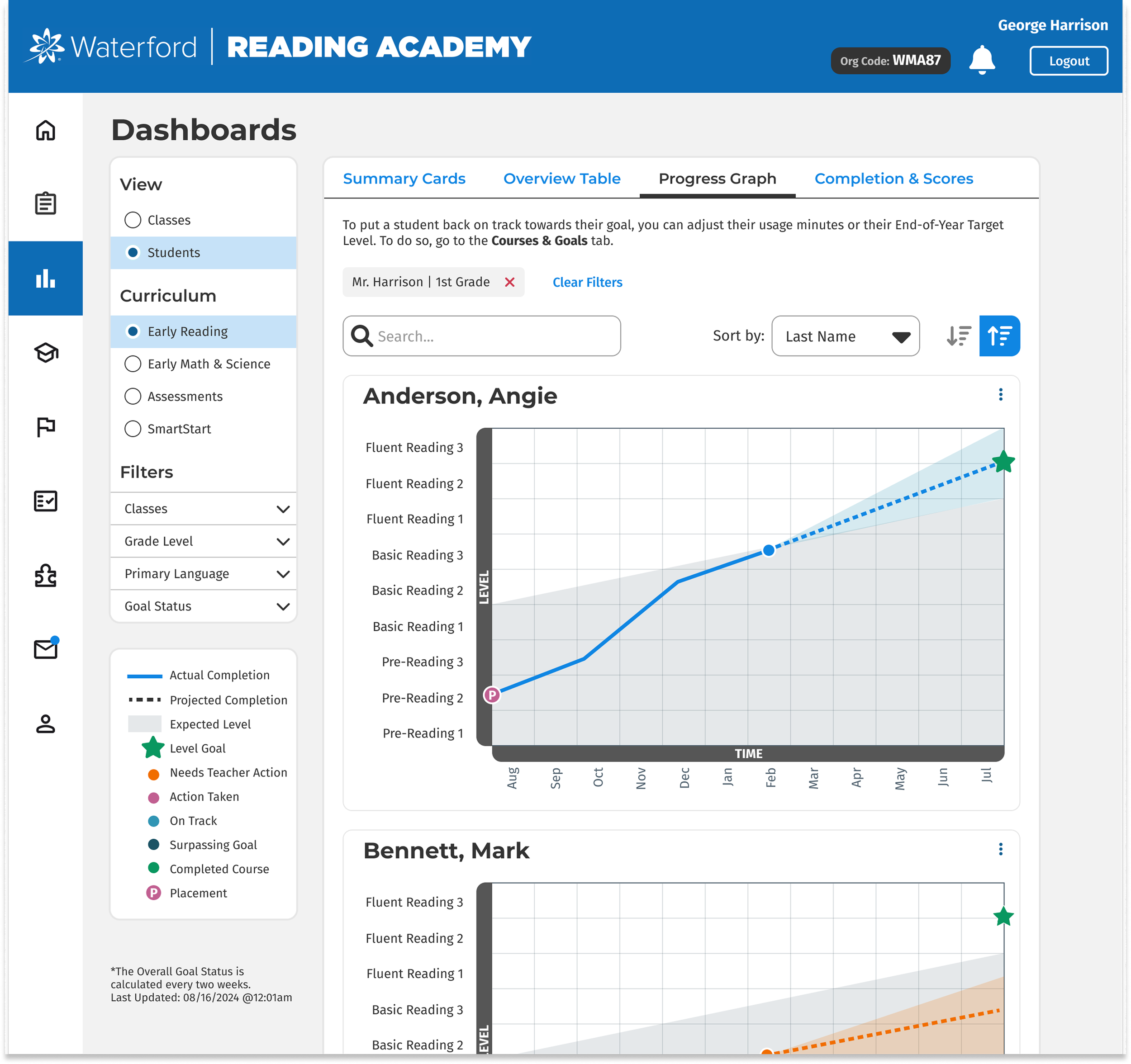

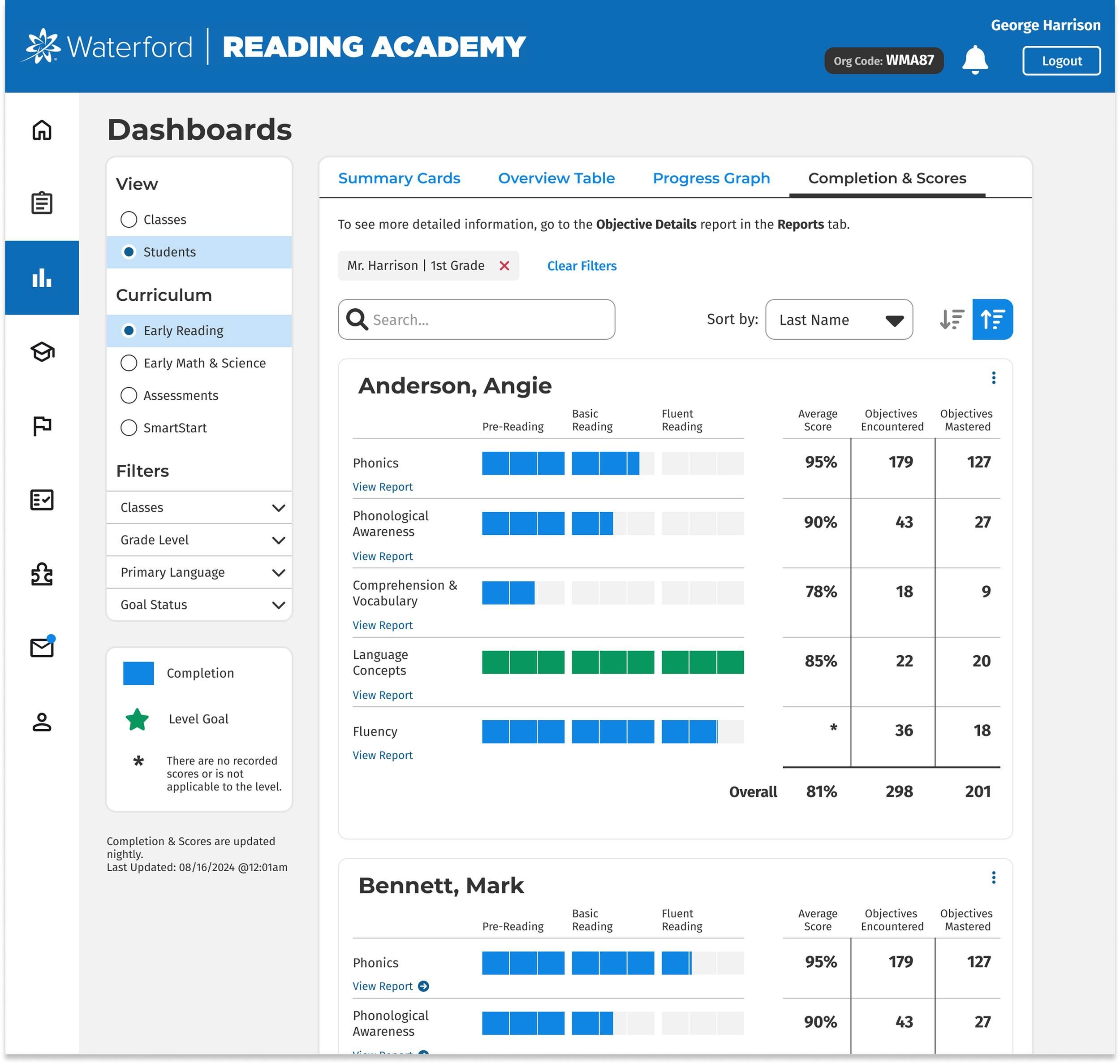

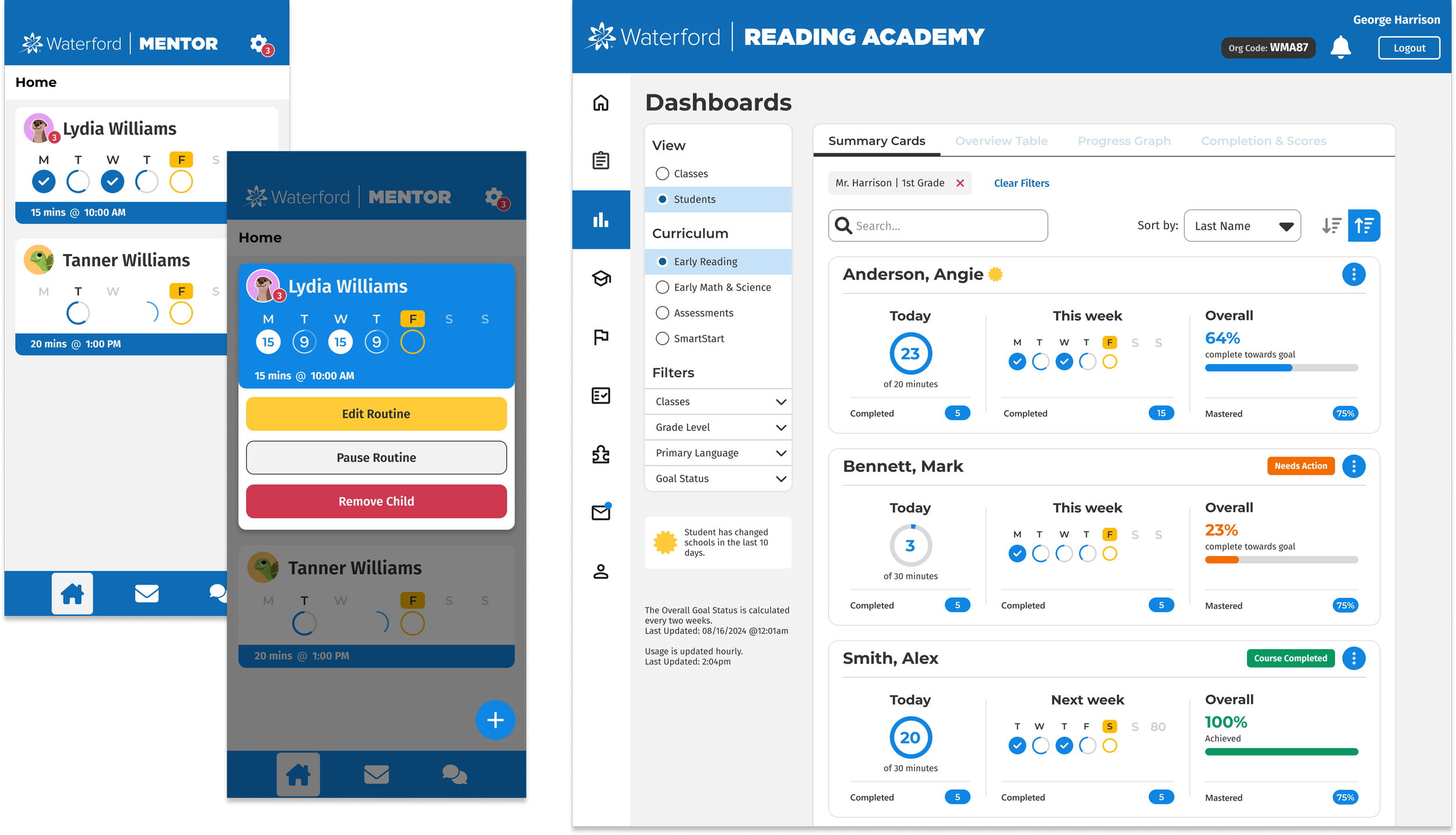

Pages and Tabs

Splitting data sets and tables into separate tabs at the top of the page reduces cognitive load and lets users view each data set when they need it.

Filtering and Search

Quick filters let users narrow data sets to the criteria they’re looking for. Search is available for specific student names.
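The filtering and search behavior described here can be sketched as a single function that applies an optional quick filter and a name search together. This is a minimal sketch under assumptions: the `StudentRow` fields, the grade filter, and `apply_quick_filters` are hypothetical stand-ins, and the sample rows are made up.

```python
from dataclasses import dataclass


@dataclass
class StudentRow:
    # Hypothetical row shape; the real table columns are not specified here.
    name: str
    grade: int
    objectives_completed: int
    objectives_total: int


def apply_quick_filters(rows, grade=None, name_query=""):
    """Narrow rows by an optional grade filter and a case-insensitive name search."""
    query = name_query.strip().lower()
    result = []
    for row in rows:
        if grade is not None and row.grade != grade:
            continue  # Quick filter: keep only the selected grade.
        if query and query not in row.name.lower():
            continue  # Search: keep only names containing the query.
        result.append(row)
    return result


# Made-up sample data for illustration.
rows = [
    StudentRow("Ada Lopez", 4, 7, 10),
    StudentRow("Ben Ortiz", 5, 3, 10),
    StudentRow("Adam Chen", 4, 10, 10),
]
for row in apply_quick_filters(rows, grade=4, name_query="ada"):
    print(row.name)
```

Treating an empty filter as “no restriction” keeps the default view unfiltered, so users only narrow the data when they choose to.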

Data Labels

Clear labeling around the cards presented users with a summary of a student’s progress. This view also carried over into:

School-wide classroom metrics

District-wide school metrics

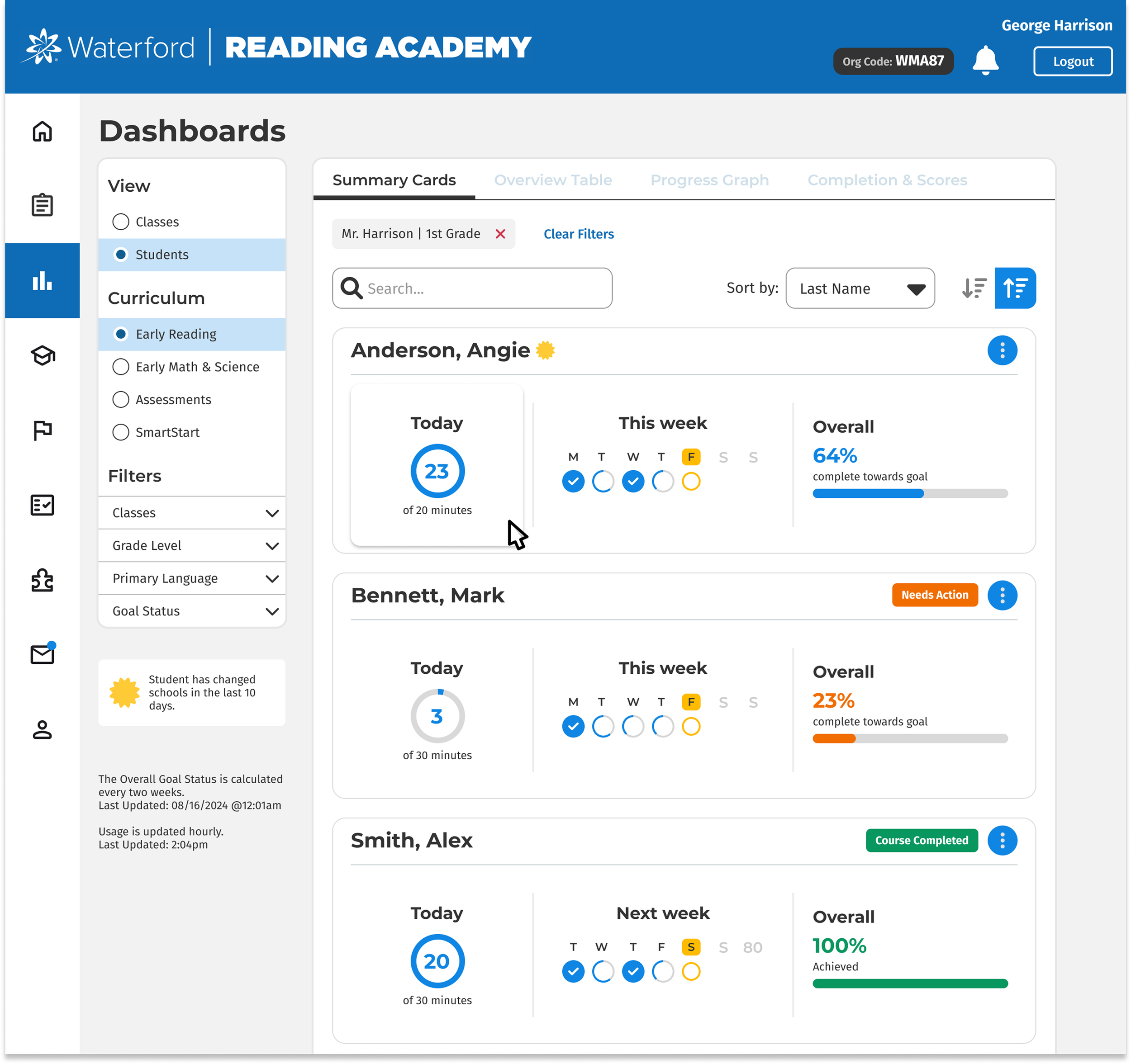

Testing and Iterating

While the redesign was very well received, there were still a few tweaks to make.

Testing indicated that users in the education domain wanted at-a-glance insight into objective completion. I added this so they would not have to open the completion and scores tab for quick data like this.

Scaling and Predictability

Visually Similar

Parents using the Mentor app can effectively communicate with teachers because the way student progress is shown is identical to what teachers would see.

Speedy Development

Because the same design was already used in the main dashboard screen, development for this parent-facing application was smooth and quick.

Key Decisions

Incremental Update?

Although incremental updates would have allowed us to roll out changes sooner, that path would have created a wildly inconsistent experience in a product that was already struggling.

For this reason, I moved forward with a total overhaul, testing with users as I designed, rather than changing the product piece by piece over time.

Accessibility Considerations

The charts use many different colors, so it was important to design with different visual impairments in mind.

In addition to the colors, symbols were adjusted to be more prominent in new data visualizations, along with an updated legend.
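One check that commonly accompanies this kind of color work is the WCAG contrast ratio between two colors. The sketch below computes it from the relative luminance formula in WCAG 2.x. It is offered as an illustration only: this case study does not state which checks were actually run, and the function names are my own.

```python
def _linearize(channel_8bit: int) -> float:
    """Convert an 8-bit sRGB channel to its linear value (WCAG 2.x definition)."""
    c = channel_8bit / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a color given as '#RRGGBB'."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)


def contrast_ratio(color_a: str, color_b: str) -> float:
    """WCAG contrast ratio, from 1.0 (identical) up to 21.0 (black on white)."""
    la, lb = relative_luminance(color_a), relative_luminance(color_b)
    lighter, darker = max(la, lb), min(la, lb)
    return (lighter + 0.05) / (darker + 0.05)


# Example: compare a chart color against a white background.
print(round(contrast_ratio("#1A73E8", "#FFFFFF"), 2))
```

WCAG 2.1 level AA asks for at least 4.5:1 for normal text and 3:1 for large text and meaningful graphical elements. A contrast check does not cover color vision deficiencies on its own, which is why pairing color with symbols and a clear legend still matters.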

Desktop or Mobile?

While I typically design mobile first, nearly all users of this platform use the web version on desktop.

I started with the desktop version to get the redesign into the hands of the majority of our users before adapting it to mobile.

Reflection

The collaboration between product and engineering was surprisingly smooth. There wasn’t a feature that couldn’t be adapted into the new design, and the design system work beforehand really played into the speedy success of this project.

In the parent-facing mobile application, there was a requirement to change the colors of the student's name bar to reflect which subject they were enrolled in. This disrupted the color scheme and introduced a number of new WCAG issues. I ended up using iconography to resolve this instead of the color change, but it took some convincing.

In hindsight, I would have changed the tables a little more than I did. I was trying to keep key aspects of the experience similar enough that users wouldn’t have to completely re-learn how to read the data, but I think there is room for improvement down the road. Still, this may have been the best approach.